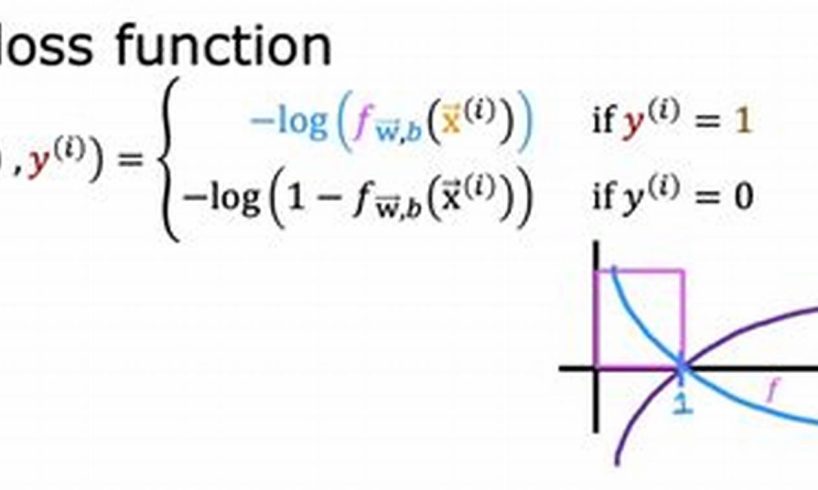

Logistic regression is a statistical model that is used to predict the probability of an event occurring. It is a type of generalized linear model (GLM) that uses a logistic function to model the relationship between the independent variables and the dependent variable. Logistic loss is a measure of the difference between the predicted probability and the actual outcome. It is used to evaluate the performance of a logistic regression model.

Logistic loss is a useful metric for evaluating the performance of a logistic regression model because it provides a measure of how well the model is able to predict the probability of an event occurring. A lower logistic loss indicates that the model is performing better. Logistic loss can also be used to compare the performance of different logistic regression models. This can be helpful for determining which model is best suited for a particular application.

Logistic regression is a powerful tool for predicting the probability of an event occurring. It is a relatively simple model to implement and it can be used to model a wide variety of data. Logistic loss is a useful metric for evaluating the performance of a logistic regression model and it can be used to compare the performance of different models.

1. Binary classification

Binary classification is a type of machine learning task in which the goal is to predict the probability of an event occurring. It is a common task in a variety of applications, such as fraud detection, medical diagnosis, and customer churn prediction.

Logistic regression is a popular algorithm for binary classification. It is a relatively simple algorithm to implement and it can be used to model a wide variety of data. Logistic loss is a measure of the difference between the predicted probability and the actual outcome in a logistic regression model. It is used to evaluate the performance of the model.

The connection between binary classification and logistic loss is that logistic loss is a measure of the performance of a logistic regression model on a binary classification task. A lower logistic loss indicates that the model is performing better. Logistic loss can be used to compare the performance of different logistic regression models on a binary classification task.

Binary classification is an important component of logistic regression. It is used to evaluate the performance of the model and to identify areas for improvement. By understanding the connection between binary classification and logistic loss, you can improve the performance of your logistic regression models on binary classification tasks.

2. Probability estimation

Logistic regression is a statistical model that is used to predict the probability of an event occurring. It is a type of generalized linear model (GLM) that uses a logistic function to model the relationship between the independent variables and the dependent variable. Logistic loss is a measure of the difference between the predicted probability and the actual outcome. It is used to evaluate the performance of a logistic regression model.

The connection between probability estimation and logistic loss is that logistic loss is a measure of the performance of a logistic regression model on a probability estimation task. A lower logistic loss indicates that the model is performing better. Logistic loss can be used to compare the performance of different logistic regression models on a probability estimation task.

Probability estimation is an important component of logistic regression. It is used to evaluate the performance of the model and to identify areas for improvement. By understanding the connection between probability estimation and logistic loss, you can improve the performance of your logistic regression models on probability estimation tasks.

3. Model evaluation

Model evaluation is the process of assessing the performance of a machine learning model. It is an important step in the machine learning process, as it allows you to determine how well your model is performing and identify areas for improvement. Logistic loss is a measure of the difference between the predicted probability and the actual outcome in a logistic regression model. It is a common metric for evaluating the performance of logistic regression models.

The connection between model evaluation and logistic loss is that logistic loss is a useful metric for evaluating the performance of logistic regression models. A lower logistic loss indicates that the model is performing better. Logistic loss can be used to compare the performance of different logistic regression models and to identify the best model for a particular task.

For example, suppose you are building a logistic regression model to predict the probability of a customer churning. You can use logistic loss to evaluate the performance of your model and to compare it to other models. The model with the lowest logistic loss is the best model for predicting customer churn.

Model evaluation is an important part of the machine learning process. It allows you to determine how well your model is performing and identify areas for improvement. Logistic loss is a useful metric for evaluating the performance of logistic regression models. By understanding the connection between model evaluation and logistic loss, you can improve the performance of your machine learning models.

4. Optimization

Optimization is the process of finding the best possible solution to a problem. In the context of erm with logistic loss, optimization is used to find the values of the model parameters that minimize the logistic loss. This is important because it allows us to build models that make accurate predictions.

- Gradient descent is a common optimization algorithm used to minimize logistic loss. Gradient descent works by iteratively moving the model parameters in the direction of the negative gradient of the logistic loss function. This process continues until the gradient is zero, which indicates that the model parameters are at the optimal values.

- Regularization is a technique that can be used to improve the performance of logistic regression models. Regularization works by adding a penalty term to the logistic loss function. This penalty term encourages the model parameters to be small, which helps to prevent overfitting. Overfitting occurs when a model is too complex and learns the training data too well, which can lead to poor performance on new data.

- Cross-validation is a technique that can be used to evaluate the performance of logistic regression models. Cross-validation works by dividing the training data into multiple subsets. The model is then trained on each subset of the data, and the performance of the model is evaluated on the remaining data. This process is repeated for each subset of the data, and the average performance of the model is calculated. Cross-validation can be used to estimate the generalization error of a model, which is the error that the model is expected to make on new data.

Optimization is an important part of the erm with logistic loss process. By understanding the different optimization techniques that are available, you can improve the performance of your logistic regression models.

5. Regularization

Regularization is a technique used in erm with logistic loss to improve the performance of logistic regression models. Logistic regression is a statistical model that is used to predict the probability of an event occurring. It is a type of generalized linear model (GLM) that uses a logistic function to model the relationship between the independent variables and the dependent variable. Logistic loss is a measure of the difference between the predicted probability and the actual outcome. It is used to evaluate the performance of a logistic regression model.

Regularization works by adding a penalty term to the logistic loss function. This penalty term encourages the model parameters to be small. This helps to prevent overfitting, which occurs when a model is too complex and learns the training data too well. Overfitting can lead to poor performance on new data.

There are several different types of regularization techniques. The most common type of regularization is L2 regularization. L2 regularization adds a penalty term to the logistic loss function that is proportional to the squared sum of the model parameters. This penalty term encourages the model parameters to be small, which helps to prevent overfitting.

Regularization is an important part of erm with logistic loss. By understanding how regularization works, you can improve the performance of your logistic regression models.

Here is an example of how regularization can be used to improve the performance of a logistic regression model. Suppose you are building a logistic regression model to predict the probability of a customer churning. You can use regularization to prevent the model from overfitting the training data. This will help the model to make more accurate predictions on new data.

Regularization is a powerful technique that can be used to improve the performance of logistic regression models. By understanding how regularization works, you can improve the performance of your machine learning models.

6. Overfitting

In the context of erm with logistic loss, overfitting occurs when a logistic regression model is too complex and learns the training data too well. This can lead to poor performance on new data, as the model may not be able to generalize to new data that is different from the training data.

- Regularization

Regularization is a technique that can be used to prevent overfitting in logistic regression models. Regularization works by adding a penalty term to the logistic loss function. This penalty term encourages the model parameters to be small, which helps to prevent overfitting.

- Cross-validation

Cross-validation is a technique that can be used to evaluate the performance of logistic regression models and to identify overfitting. Cross-validation works by dividing the training data into multiple subsets. The model is then trained on each subset of the data, and the performance of the model is evaluated on the remaining data. This process is repeated for each subset of the data, and the average performance of the model is calculated. Cross-validation can be used to estimate the generalization error of a model, which is the error that the model is expected to make on new data.

- Early stopping

Early stopping is a technique that can be used to prevent overfitting in logistic regression models. Early stopping works by stopping the training process early, before the model has a chance to overfit the training data. The optimal stopping point is determined using a validation set, which is a separate dataset that is not used to train the model.

- Feature selection

Feature selection is a technique that can be used to reduce the number of features in a logistic regression model. This can help to prevent overfitting, as a model with fewer features is less likely to overfit the training data.

Overfitting is a common problem in machine learning. By understanding the different techniques that can be used to prevent overfitting, you can improve the performance of your logistic regression models.

7. Underfitting

In the context of erm with logistic loss, underfitting occurs when a logistic regression model is too simple and does not capture the complexity of the training data. This can lead to poor performance on new data, as the model may not be able to generalize to new data that is different from the training data.

Underfitting is a common problem in machine learning, and it is important to be aware of the signs of underfitting so that you can take steps to prevent it. Some of the signs of underfitting include:

- The model has a high bias error. Bias error is the error that is caused by the model’s inability to capture the complexity of the training data.

- The model has a low variance error. Variance error is the error that is caused by the model’s sensitivity to the training data.

- The model performs poorly on new data.

There are a number of things that you can do to prevent underfitting in your logistic regression models. Some of these things include:

- Use a more complex model.

- Use a larger training dataset.

- Use regularization.

- Use feature selection.

By understanding the causes and signs of underfitting, you can take steps to prevent it and improve the performance of your logistic regression models.

8. Cross-validation

Cross-validation is a resampling technique used to evaluate machine learning models. It involves partitioning the training data into multiple subsets, training the model on different combinations of these subsets, and evaluating the model’s performance on the remaining data. This process is repeated multiple times, with the results averaged to provide an estimate of the model’s generalization error.

- Data partitioning

In cross-validation, the training data is typically divided into k equally sized subsets, or folds. One fold is held out as the test set, while the remaining k-1 folds are used to train the model. This process is repeated k times, with each fold serving as the test set once.

- Model training and evaluation

For each fold, the model is trained on the k-1 training folds and evaluated on the held-out test fold. The performance of the model on the test fold is recorded, and this process is repeated for each fold.

- Performance estimation

The performance of the model is estimated by averaging the performance measures across all k folds. This provides an estimate of the model’s generalization error, which is the error that the model is expected to make on new, unseen data.

- Hyperparameter tuning

Cross-validation can also be used to tune the hyperparameters of a machine learning model. Hyperparameters are parameters that control the learning process, such as the learning rate or the regularization parameter. By performing cross-validation for different values of the hyperparameters, the optimal values can be identified.

Cross-validation is an important tool for evaluating and tuning machine learning models. It provides an estimate of the model’s generalization error, which is essential for understanding how well the model will perform on new data. Cross-validation can also be used to identify the optimal values of the model’s hyperparameters.

Frequently Asked Questions about Logistic Regression with Logistic Loss

Logistic regression with logistic loss is a statistical technique used to model the probability of an event occurring. It is widely used in machine learning for tasks such as binary classification and probability estimation.

Question 1: What is the difference between logistic regression and logistic loss?

Answer: Logistic regression is a statistical model used to predict the probability of an event occurring, while logistic loss is a measure of the difference between the predicted probability and the actual outcome. Logistic loss is used to evaluate the performance of a logistic regression model.

Question 2: When should I use logistic regression with logistic loss?

Answer: Logistic regression with logistic loss is well-suited for tasks where the goal is to predict the probability of an event occurring, such as binary classification or probability estimation.

Question 3: How do I interpret the coefficients in a logistic regression model with logistic loss?

Answer: The coefficients in a logistic regression model with logistic loss represent the change in the log-odds of the event occurring for a one-unit increase in the corresponding predictor variable, holding all other variables constant.

Question 4: What are the advantages of using logistic regression with logistic loss?

Answer: Logistic regression with logistic loss is relatively easy to interpret, can handle both continuous and categorical predictor variables, and can be used to model non-linear relationships. Additionally, logistic loss is a convex function, which makes it relatively easy to optimize.

Question 5: What are the limitations of using logistic regression with logistic loss?

Answer: Logistic regression with logistic loss can be sensitive to outliers, and it may not be suitable for tasks where the data is highly imbalanced. Additionally, logistic regression with logistic loss assumes that the relationship between the predictor variables and the event probability is linear on the log-odds scale.

Question 6: How can I improve the performance of a logistic regression model with logistic loss?

Answer: There are several techniques that can be used to improve the performance of a logistic regression model with logistic loss, including regularization, feature selection, and cross-validation.

Summary: Logistic regression with logistic loss is a powerful statistical technique for modeling the probability of an event occurring. It is relatively easy to interpret and can handle both continuous and categorical predictor variables. However, it is important to be aware of its limitations and to use appropriate techniques to improve its performance.

Transition to the next article section: By understanding the fundamentals of logistic regression with logistic loss, you can effectively apply it to a wide range of machine learning tasks.

Tips for Using Logistic Regression with Logistic Loss

Logistic regression is a powerful statistical technique that is widely used for tasks such as binary classification and probability estimation. By following these tips, you can improve the performance of your logistic regression models and achieve better results.

Tip 1: Understand the basics of logistic regression and logistic loss.

Before you start using logistic regression with logistic loss, it is important to understand the basics of how these techniques work. This will help you to make informed decisions about how to use these techniques and how to interpret the results.

Tip 2: Use the right data for your task.

Logistic regression with logistic loss is well-suited for tasks where the goal is to predict the probability of an event occurring. However, it is important to make sure that you have the right data for your task. The data should be representative of the population that you are interested in predicting for, and it should contain all of the relevant predictor variables.

Tip 3: Prepare your data carefully.

The quality of your data will have a significant impact on the performance of your logistic regression model. Make sure to clean your data, handle missing values, and scale your data before you start training your model.

Tip 4: Choose the right model complexity.

The complexity of your logistic regression model will affect its performance. A model that is too simple may not be able to capture the complexity of your data, while a model that is too complex may overfit your data. Use cross-validation to find the optimal model complexity.

Tip 5: Regularize your model.

Regularization is a technique that can help to improve the performance of logistic regression models. Regularization works by penalizing the model for having large coefficients. This helps to prevent overfitting and can improve the model’s generalization performance.

Tip 6: Use cross-validation.

Cross-validation is a technique that can help you to evaluate the performance of your logistic regression model and to identify areas for improvement. Cross-validation works by dividing your data into multiple subsets and then training and evaluating your model on different combinations of these subsets. This helps to provide a more accurate estimate of your model’s generalization performance.

Summary: By following these tips, you can improve the performance of your logistic regression models and achieve better results. Logistic regression is a powerful tool for modeling the probability of an event occurring, and by understanding how to use it effectively, you can harness its power to solve a wide range of problems.

Transition to the article’s conclusion: These tips will help you to get started with using logistic regression with logistic loss. With practice, you will be able to use these techniques to build powerful and accurate models.

Conclusion

Logistic regression with logistic loss is a powerful statistical technique that can be used to model the probability of an event occurring. It is a versatile technique that can be used for a wide range of tasks, including binary classification and probability estimation.

In this article, we have explored the basics of logistic regression with logistic loss, including how to use these techniques and how to interpret the results. We have also provided some tips for improving the performance of your logistic regression models. By following these tips, you can harness the power of logistic regression to solve a wide range of problems.

As we move forward, logistic regression with logistic loss will continue to be an important tool for data scientists and machine learning practitioners. By understanding how to use these techniques effectively, you can gain valuable insights from your data and make better decisions.