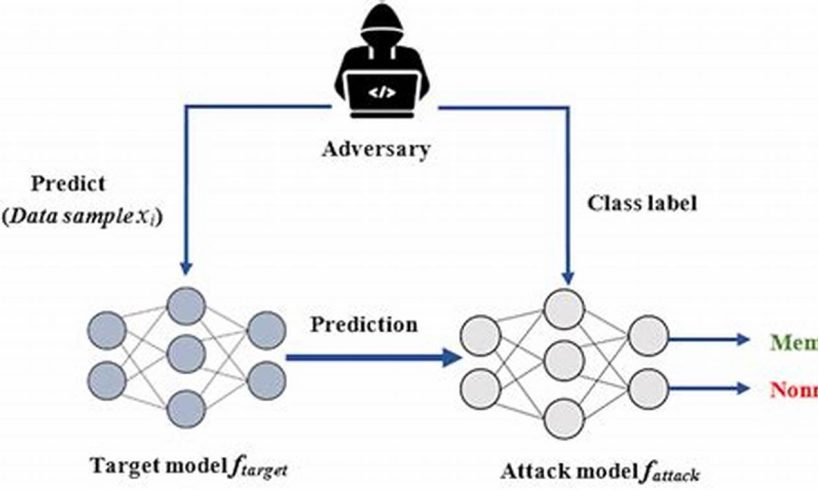

A membership inference attack on logistic regression is an attack in which an adversary tries to determine whether a given data point was used to train a logistic regression model. The adversary typically needs only query access to the model, meaning the ability to submit inputs and observe the predicted probabilities or labels, and does not need access to the training data or to the model’s parameters.

Membership inference attacks are a serious concern for machine learning models, as they can be used to learn sensitive information about the training data. This information could be used to identify individuals who have been included in the training data, or to infer other sensitive information about the data.

Several techniques can be used to perform membership inference attacks on logistic regression models. One common technique relies on shadow models: the adversary trains one or more models on data that resembles the target model’s training data, so the adversary knows exactly which records each shadow model was trained on. The shadow models’ outputs on their own training records and on held-out records show what a “member” output looks like, typically a higher confidence in the correct class. That rule is then applied to the target model’s output for the record in question.

Because these attacks can expose sensitive information about the people behind the data, anyone who trains or deploys a logistic regression model on personal data should take steps to defend against them.

1. Data Privacy: Attacks can reveal sensitive information about individuals in training data.

In the context of membership inference attacks on logistic regression, this aspect highlights a critical concern: the potential exposure of sensitive personal information. These attacks exploit the model’s learned patterns to infer whether an individual’s data was used in training. This poses significant data privacy risks, as attackers could potentially identify individuals based on their sensitive attributes, such as health conditions, financial status, or political affiliations.

- Facet 1: Data Leakage

Membership itself can be the sensitive fact. For example, if a logistic regression model for disease prediction was trained only on the records of patients diagnosed with a particular condition, showing that a person’s record was in the training set reveals that person’s diagnosis.

- Facet 2: Privacy Violations

These attacks violate individuals’ privacy by revealing their inclusion in training datasets without their knowledge or consent. This could have severe consequences, such as discrimination or targeted advertising based on sensitive information.

- Facet 3: Model Bias

Membership inference attacks do not change a model’s predictions, so they do not introduce bias by themselves. They can, however, reveal which individuals or groups are represented in the training data, and that exposure may fall unevenly on the groups whose records the model has fit most closely.

These facets underscore the importance of protecting data privacy in the context of membership inference attacks on logistic regression models. It is crucial to implement robust defenses and ethical considerations to safeguard sensitive information and maintain the trust of individuals whose data is used in machine learning applications.

2. Model Security: Attacks can undermine trust in a model and support further attacks.

Membership inference attacks on logistic regression models are also a model security concern. The attack itself is passive: it observes the model’s outputs and does not alter the model or its predictions. The security risk is indirect, because what the attacker learns can weaken confidence in the model and inform follow-up attacks.

One indirect risk is that membership information tells an attacker which regions of the input space the model has fit most closely. Combined with repeated queries, this can help the attacker approximate the model’s decision boundary, and that knowledge may make it easier to craft adversarial examples that the model will misclassify.

Membership inference attacks can also damage the perceived integrity of the model. By revealing that sensitive records were part of the training data, these attacks can erode trust in the model and its predictions. This can have far-reaching consequences, particularly in applications where the model is used to make critical decisions affecting individuals’ lives.

For example, consider a logistic regression model used to predict loan approvals. A membership inference attack could reveal that a particular applicant’s record, including sensitive fields such as race or gender, was part of the training data. Beyond the privacy harm to that applicant, this could raise concerns about whether those attributes influence the model’s predictions, and therefore about unfair or discriminatory lending practices.

Understanding the connection between membership inference attacks and model security is crucial for developing robust defenses. By implementing effective countermeasures, organizations can protect their models from these attacks and maintain the accuracy and integrity of their predictions.

3. Training Data Leakage: Attacks can expose confidential data used to train models.

In the context of membership inference attacks on logistic regression, training data leakage poses a significant threat to data confidentiality. These attacks exploit the model’s learned patterns to infer whether an individual’s data was used in training, potentially exposing sensitive information that was included in the training dataset.

- Facet 1: Data Privacy Violations

Membership inference attacks can lead to the leakage of confidential data that was used to train the model, violating individuals’ privacy. For example, an attacker could infer the financial status of individuals based on their transaction data used to train a logistic regression model for credit scoring.

- Facet 2: Model Inversion

Training data leakage can facilitate model inversion attacks, where an attacker attempts to reconstruct the training data from the model. This could lead to the exposure of sensitive information that was used to train the model.

- Facet 3: Adversarial Attacks

Leaked training data can be used to craft adversarial examples that the model will misclassify, potentially compromising the model’s security and integrity.

- Facet 4: Regulatory Compliance

Training data leakage can violate data protection regulations, such as the General Data Protection Regulation (GDPR), which imposes strict requirements on the collection, use, and disclosure of personal data.

These facets highlight the critical connection between training data leakage and membership inference attacks on logistic regression. By understanding these facets, organizations can develop robust defenses to protect the confidentiality of their training data and mitigate the risks associated with these attacks.

4. Shadow Models: Adversaries can train models to mimic target models, aiding attacks.

In the context of membership inference attacks on logistic regression models, the use of shadow models plays a significant role in aiding adversaries. By training a shadow model on a dataset that is similar to the training data of the target model, an attacker can gain insights into the target model’s decision-making process.

- Facet 1: Model Mimicry

Shadow models mimic the behavior of the target model, enabling attackers to approximate the target model’s predictions. This allows them to infer whether an individual’s data was used to train the target model, even without direct access to the target model’s training data.

- Facet 2: Data Reconstruction

Shadow models do not reconstruct the training data directly. Instead, they let the attacker calibrate what the target model’s output looks like for records it was trained on, so that candidate records the attacker already holds can be flagged as likely members. Confirming membership for many candidates effectively recovers part of the training set and may expose sensitive information.

- Facet 3: Adversarial Attacks

Shadow models can facilitate adversarial attacks on the target model. By identifying the decision boundaries of the target model, attackers can craft adversarial examples that the target model will misclassify. This can compromise the integrity and security of the target model.

- Facet 4: Model Inversion

Shadow models can be used in model inversion attacks, where an attacker attempts to recover the training data from the model. By leveraging the shadow model’s mimicry of the target model, attackers can approximate the inverse mapping from the model’s predictions to the training data.

These facets highlight the critical connection between shadow models and membership inference attacks on logistic regression models. Understanding these facets is crucial for developing robust defenses against these attacks and safeguarding the privacy of training data.
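The shadow-model idea described in this section can be sketched in a few lines of standard-library Python. This is a deliberately simplified illustration, not a reference implementation: it uses a single shadow model and a confidence cutoff in place of a trained attack classifier, and it assumes the attacker holds data from the same distribution as the target and knows the true label of each candidate record. The data generator and all function names (`make_data`, `train_logreg`, `confidence`, `best_threshold`, `infer_member`) are hypothetical.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def make_data(n, d, rng):
    # Hypothetical synthetic task: the label depends on feature 0 plus noise.
    X = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(n)]
    y = [1 if x[0] + 0.5 * rng.gauss(0, 1) > 0 else 0 for x in X]
    return X, y

def train_logreg(X, y, lr=0.5, epochs=200):
    # Plain batch gradient descent without regularization, so it can overfit.
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(epochs):
        grad = [0.0] * d
        for xi, yi in zip(X, y):
            err = sigmoid(sum(a * b for a, b in zip(w, xi))) - yi
            for j in range(d):
                grad[j] += err * xi[j] / n
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w

def confidence(w, x, y):
    # Probability the model assigns to the record's true label.
    p = sigmoid(sum(a * b for a, b in zip(w, x)))
    return p if y == 1 else 1.0 - p

def best_threshold(conf_in, conf_out):
    # Choose the cutoff that best separates shadow members from non-members.
    total = len(conf_in) + len(conf_out)
    best_t, best_acc = 0.5, 0.0
    for t in sorted(conf_in + conf_out):
        correct = sum(c >= t for c in conf_in) + sum(c < t for c in conf_out)
        if correct / total > best_acc:
            best_t, best_acc = t, correct / total
    return best_t

rng = random.Random(0)
d, n = 20, 40

# Target model: the attacker can query it but never sees X_tgt_in.
X_tgt_in, y_tgt_in = make_data(n, d, rng)
X_tgt_out, y_tgt_out = make_data(n, d, rng)
w_target = train_logreg(X_tgt_in, y_tgt_in)

# Shadow model: trained by the attacker, who knows its members and non-members.
X_sh_in, y_sh_in = make_data(n, d, rng)
X_sh_out, y_sh_out = make_data(n, d, rng)
w_shadow = train_logreg(X_sh_in, y_sh_in)
sh_in = [confidence(w_shadow, x, y) for x, y in zip(X_sh_in, y_sh_in)]
sh_out = [confidence(w_shadow, x, y) for x, y in zip(X_sh_out, y_sh_out)]
threshold = best_threshold(sh_in, sh_out)

def infer_member(x, y):
    # Apply the cutoff learned on the shadow model to the target's output.
    return confidence(w_target, x, y) >= threshold

hits = sum(infer_member(x, y) for x, y in zip(X_tgt_in, y_tgt_in))
hits += sum(not infer_member(x, y) for x, y in zip(X_tgt_out, y_tgt_out))
attack_accuracy = hits / (2 * n)
print(f"threshold={threshold:.3f} attack accuracy={attack_accuracy:.2f}")
```

An attack accuracy near 0.5 would mean the attacker does no better than guessing. How far above 0.5 it lands depends on how closely the shadow data matches the target’s data and on how much the target model overfits.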

5. Overfitting: Models prone to overfitting are more vulnerable to attacks.

In the context of membership inference attacks on logistic regression models, overfitting plays a significant role in increasing the vulnerability of models to these attacks.

Overfitting occurs when a model learns the specific details of the training data too closely, compromising its ability to generalize well to new data. This can create weaknesses that attackers can exploit to infer whether an individual’s data was used to train the model.

Overfitting makes a model more vulnerable because it widens the gap between how the model treats records it has seen and records it has not. An overfit model tends to assign higher confidence to the correct class, and therefore a lower loss, on its training records than on comparable unseen records. An attacker who can query the model with a candidate record only needs to check whether the returned confidence is unusually high, which requires no access to the training data itself.

For example, consider a logistic regression model trained to predict whether a patient has a specific disease based on their medical records. If the model is overfit, it may have latched onto incidental details of individual training records, such as an unusual combination of test results. When the attacker submits a patient’s record and the model returns a near-certain prediction that matches the patient’s true status, that is evidence that the record was in the training set, which in turn may reveal sensitive health information.

Understanding the connection between overfitting and vulnerability to membership inference attacks is crucial for developing robust defenses against these attacks. By mitigating overfitting and enhancing the model’s generalization capabilities, organizations can protect their models from these attacks and maintain the privacy of their training data.
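The link between overfitting and membership leakage can be checked empirically. The sketch below trains an unregularized logistic regression on a small synthetic dataset with many noise features, then compares the per-record loss on training records (members) with the loss on fresh records (non-members). It also applies a simple loss-threshold rule that guesses "member" when the loss is at most the average training loss. The dataset, the sizes `d` and `n`, the helper names, and the assumption that the attacker knows or can estimate that average are all illustrative choices, not part of any particular published attack.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def log_loss(w, x, y):
    # Cross-entropy loss of one labeled record, clipped to avoid log(0).
    p = sigmoid(sum(a * b for a, b in zip(w, x)))
    p = min(max(p, 1e-12), 1.0 - 1e-12)
    return -math.log(p) if y == 1 else -math.log(1.0 - p)

def train_logreg(X, y, lr=0.5, epochs=300):
    # Unregularized batch gradient descent, so the model is free to overfit.
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(epochs):
        grad = [0.0] * d
        for xi, yi in zip(X, y):
            err = sigmoid(sum(a * b for a, b in zip(w, xi))) - yi
            for j in range(d):
                grad[j] += err * xi[j] / n
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w

rng = random.Random(1)
d, n = 30, 40  # many features relative to records, which encourages overfitting

def sample(count):
    # Hypothetical task: the label depends on feature 0; the rest is noise.
    X = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(count)]
    y = [1 if x[0] + 0.5 * rng.gauss(0, 1) > 0 else 0 for x in X]
    return X, y

X_in, y_in = sample(n)    # members: used for training
X_out, y_out = sample(n)  # non-members: same distribution, never trained on
w = train_logreg(X_in, y_in)

loss_in = [log_loss(w, x, y) for x, y in zip(X_in, y_in)]
loss_out = [log_loss(w, x, y) for x, y in zip(X_out, y_out)]
mean_in, mean_out = sum(loss_in) / n, sum(loss_out) / n

# Loss-threshold rule: guess "member" when the loss is at most tau.
tau = mean_in  # assumption: the attacker knows or can estimate this value
tpr = sum(l <= tau for l in loss_in) / n   # members correctly flagged
fpr = sum(l <= tau for l in loss_out) / n  # non-members wrongly flagged
print(f"train loss={mean_in:.3f} test loss={mean_out:.3f} TPR={tpr:.2f} FPR={fpr:.2f}")
```

The difference between the two average losses is the generalization gap. The larger it is, the more room a threshold rule has to separate members from non-members, which is why reducing overfitting also tends to reduce membership leakage.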

6. Dimensionality: High-dimensional data can exacerbate attack effectiveness.

In the context of membership inference attacks on logistic regression models, dimensionality plays a crucial role in amplifying the effectiveness of these attacks. High-dimensional data, characterized by a large number of features or attributes, poses significant challenges to model training and can increase the vulnerability of models to membership inference attacks.

One reason for this increased vulnerability is that high-dimensional data can lead to overfitting, as models attempt to capture complex relationships between numerous features. Overfitting makes models more susceptible to membership inference attacks, as attackers can exploit the model’s reliance on specific features to infer whether an individual’s data was used in training.

Additionally, each extra feature gives the model another way to tell individual training records apart. When the number of features is large relative to the number of training records, the model can fit nearly every training record with high confidence, so training records tend to receive more confident correct predictions than comparable unseen records. This gives the attacker a clearer signal, even if the attacker does not have access to the training data itself.

For example, consider a logistic regression model trained to predict whether a patient has a specific disease based on their medical records. If each record contains thousands of test results and the training set covers only a few hundred patients, the model may fit combinations of features that are unique to individual patients. Querying the model with one of those patients’ records would then return an unusually confident prediction, allowing the attacker to infer that the record was used in training and potentially revealing sensitive health information.

Understanding the connection between dimensionality and the effectiveness of membership inference attacks is crucial for developing robust defenses against these attacks. By carefully managing the dimensionality of training data and employing regularization techniques to mitigate overfitting, organizations can reduce the vulnerability of their models to these attacks and maintain the privacy of their training data.
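The role of regularization here can be made concrete with the standard L2-regularized logistic regression objective. The notation is chosen for illustration ($w$ for the weights, $\lambda \ge 0$ for the penalty strength, labels $y_i \in \{0, 1\}$, $n$ training records) and is not tied to any specific library:

$$
J(w) = \frac{1}{n}\sum_{i=1}^{n}\Big[-y_i \log \sigma(w^\top x_i) - (1 - y_i)\log\big(1 - \sigma(w^\top x_i)\big)\Big] + \frac{\lambda}{2}\lVert w\rVert_2^2,
\qquad \sigma(z) = \frac{1}{1 + e^{-z}}
$$

When the number of features approaches or exceeds the number of training records, the training set is often linearly separable. With $\lambda = 0$, the loss can then be pushed toward zero by letting $\lVert w\rVert_2$ grow without bound, which yields near-certain predictions on training records. That is the very signal a membership inference attack looks for. A positive $\lambda$ keeps the weights bounded and limits that confidence, usually at some cost in training fit.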

7. Defense Mechanisms: Techniques exist to mitigate attacks, but none are foolproof.

Membership inference attacks on logistic regression models pose significant challenges to data privacy and model security. While various defense mechanisms have been proposed to mitigate these attacks, it is crucial to acknowledge that no single technique is foolproof. Understanding the limitations of these defense mechanisms is essential for developing effective strategies to protect against membership inference attacks.

One common defense mechanism against membership inference attacks is data augmentation. By adding synthetic or noisy data to the training dataset, the model’s reliance on specific features can be reduced, making it more difficult for attackers to infer membership. However, data augmentation may not be effective in all cases, particularly when the attacker has access to a large amount of background knowledge about the training data.

Another technique that is sometimes proposed is adversarial training, where the model is trained on inputs that have been deliberately perturbed to cause misclassification. Adversarial training was designed to make models robust to adversarial examples rather than to hide membership, and it often reduces accuracy on legitimate data. Some studies report that it can even increase membership leakage, so its effect on membership inference should be measured rather than assumed.

Differential privacy protects individual records by adding calibrated noise during training, for example to the gradients or to the learned weights, so that the trained model depends only weakly on any single record. This limits, in a provable way, how much an attacker can learn about membership from the model. However, differential privacy can also degrade the model’s accuracy, especially when a strong privacy guarantee requires a large amount of noise.
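For logistic regression specifically, one well-studied recipe is output perturbation: train an L2-regularized model, then add random noise to the learned weights before releasing them. The sketch below illustrates the idea under stated assumptions rather than giving an audited implementation. It assumes every feature vector is scaled to norm at most 1, the model has no intercept, and the noise scale `2 / (n * lam * epsilon)` follows the commonly cited sensitivity bound for this setting. The formal guarantee also assumes the exact minimizer, which gradient descent only approximates. All function names are hypothetical.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def clip_to_unit_ball(x):
    # The sensitivity bound used below assumes every feature vector has norm <= 1.
    norm = math.sqrt(sum(v * v for v in x))
    return list(x) if norm <= 1.0 else [v / norm for v in x]

def train_l2_logreg(X, y, lam, lr=1.0, epochs=500):
    # Gradient descent on (1/n) * sum(logistic loss) + (lam / 2) * ||w||^2.
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(epochs):
        grad = [lam * wj for wj in w]
        for xi, yi in zip(X, y):
            err = sigmoid(sum(a * b for a, b in zip(w, xi))) - yi
            for j in range(d):
                grad[j] += err * xi[j] / n
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w

def sample_noise(d, scale, rng):
    # Noise with density proportional to exp(-||b|| / scale):
    # a uniformly random direction with a Gamma(shape=d, scale=scale) norm.
    direction = [rng.gauss(0, 1) for _ in range(d)]
    length = math.sqrt(sum(v * v for v in direction))
    radius = rng.gammavariate(d, scale)
    return [radius * v / length for v in direction]

def private_logreg(X, y, epsilon, lam, rng):
    # Output perturbation: train, then add noise scaled to 2 / (n * lam * epsilon).
    n, d = len(X), len(X[0])
    X = [clip_to_unit_ball(x) for x in X]
    w = train_l2_logreg(X, y, lam)
    noise = sample_noise(d, 2.0 / (n * lam * epsilon), rng)
    return [wj + bj for wj, bj in zip(w, noise)]

# Hypothetical toy data: 50 records, 5 features, label driven by feature 0.
rng = random.Random(0)
X = [[rng.gauss(0, 1) for _ in range(5)] for _ in range(50)]
y = [1 if x[0] > 0 else 0 for x in X]
w_private = private_logreg(X, y, epsilon=1.0, lam=0.1, rng=rng)
print([round(v, 3) for v in w_private])
```

A smaller `epsilon` (stronger privacy) or a smaller training set increases the noise scale, which is where the accuracy cost comes from. In practice, a maintained differential privacy library is a safer choice than hand-rolled noise.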

Understanding the limitations of defense mechanisms against membership inference attacks is crucial for developing robust strategies to protect data privacy and model security. By carefully considering the strengths and weaknesses of different defense mechanisms, organizations can make informed decisions about the most appropriate techniques to implement based on their specific requirements and risk tolerance.

FAQs on Membership Inference Attack on Logistic Regression

This section addresses frequently asked questions and misconceptions regarding membership inference attacks on logistic regression models.

Question 1: What is a membership inference attack?

Answer: A membership inference attack aims to determine whether a specific data point was part of the training dataset used to develop a machine learning model, such as a logistic regression model.

Question 2: Why are membership inference attacks a concern?

Answer: These attacks can compromise data privacy by revealing sensitive information about individuals whose data was used in training, potentially leading to discrimination or targeted advertising.

Question 3: How do membership inference attacks work on logistic regression models?

Answer: The attacker queries the model with a candidate record and examines the returned probability. Models tend to be more confident, and to have lower loss, on records they were trained on than on unseen records, so an unusually confident correct prediction suggests membership. The attacker can calibrate the cutoff using shadow models trained on similar data.

Question 4: Are there defense mechanisms against membership inference attacks?

Answer: Yes, several defenses have been proposed, such as regularization, data augmentation, and differential privacy. Adversarial training is sometimes listed as well, although it was designed for a different threat. No single defense is foolproof, and attackers may develop new techniques to bypass these defenses.

Question 5: What is the significance of overfitting in membership inference attacks?

Answer: Overfitting occurs when a model learns specific details of the training data too closely. This widens the gap between the model’s confidence on training records and its confidence on unseen records, and that gap is the signal attackers exploit to infer membership.

Question 6: How does dimensionality impact membership inference attacks?

Answer: High-dimensional data, characterized by numerous features, can make membership inference attacks more effective. It increases the likelihood of overfitting, which lets the model fit individual training records with high confidence and makes them easier to tell apart from unseen records.

Summary: Membership inference attacks on logistic regression models pose significant risks to data privacy and model security. Understanding these attacks and the limitations of defense mechanisms is crucial for developing robust strategies to protect against them.

This concludes our exploration of membership inference attacks on logistic regression. The next section discusses mitigation techniques to safeguard against these attacks.

Tips to Mitigate Membership Inference Attacks on Logistic Regression

To effectively safeguard against membership inference attacks on logistic regression models, consider implementing the following tips:

Tip 1: Data Augmentation

Incorporate synthetic or noisy data into the training dataset to reduce the model’s reliance on specific features, making it harder for attackers to infer membership.

Tip 2: Adversarial Training

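Train the model on adversarially perturbed inputs to improve its robustness against adversarial examples. This may decrease accuracy on legitimate data, and because the technique was not designed to hide membership, measure its effect on membership leakage before relying on it.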

Tip 3: Differential Privacy

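Add calibrated noise during training, for example to the gradients or to the learned weights, to limit how much the model reveals about any single record. However, this technique can degrade the model’s accuracy, especially when a strong privacy guarantee requires high noise levels.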

Tip 4: Regularization Techniques

Apply regularization methods, such as L1 or L2 regularization, to penalize model complexity and prevent overfitting, which can increase vulnerability to membership inference attacks.

Tip 5: Feature Selection

Carefully select the features used in the model to minimize the risk of overfitting and reduce the potential for attackers to exploit specific feature combinations.

Tip 6: Model Ensembling

Combine multiple logistic regression models into an ensemble to improve overall robustness against membership inference attacks. This technique makes it harder for attackers to infer membership from any single model.

Tip 7: Privacy-Preserving Machine Learning Algorithms

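Explore privacy-preserving machine learning techniques, such as homomorphic encryption or secure multi-party computation, to protect data while it is being used for model training and inference. These techniques protect the data during computation, but they do not by themselves stop a trained model’s outputs from leaking membership, so combine them with the other tips in this list.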

Tip 8: Continuous Monitoring and Evaluation

Regularly monitor and evaluate the model’s performance and vulnerability to membership inference attacks. This allows for timely detection and implementation of additional mitigation measures as needed.
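One way to make this monitoring concrete is to run a membership inference attack against your own model and track how well it separates known members from known non-members. The sketch below computes two common summary numbers from attack scores: the AUC (the probability that a random member receives a higher score than a random non-member) and the advantage (true positive rate minus false positive rate) at a chosen cutoff. The function names are hypothetical, and the score lists are made-up illustrative inputs, not measurements from a real model.

```python
def attack_auc(member_scores, nonmember_scores):
    # Probability that a random member outscores a random non-member.
    # Ties count as half a win.
    wins = 0.0
    for m in member_scores:
        for u in nonmember_scores:
            if m > u:
                wins += 1.0
            elif m == u:
                wins += 0.5
    return wins / (len(member_scores) * len(nonmember_scores))

def membership_advantage(member_scores, nonmember_scores, threshold):
    # TPR minus FPR for the rule "guess member when score >= threshold".
    tpr = sum(s >= threshold for s in member_scores) / len(member_scores)
    fpr = sum(s >= threshold for s in nonmember_scores) / len(nonmember_scores)
    return tpr - fpr

# Made-up confidence scores, for illustration only.
members = [0.99, 0.97, 0.91, 0.88, 0.75]
nonmembers = [0.95, 0.80, 0.66, 0.60, 0.52]

auc = attack_auc(members, nonmembers)
adv = membership_advantage(members, nonmembers, threshold=0.85)
print(f"attack AUC={auc:.2f} advantage={adv:.2f}")
```

An AUC close to 0.5 and an advantage close to 0 indicate that the attack does little better than guessing. Values that drift upward after retraining or after a change in features are a cue to revisit regularization or the privacy settings.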

Summary: By adopting these tips, organizations can enhance the security and privacy of their logistic regression models against membership inference attacks, safeguarding sensitive data and maintaining the integrity of their machine learning applications.

Conclusion

Membership inference attacks on logistic regression models pose significant threats to data privacy and model security. These attacks exploit the model’s learned decision boundaries to infer whether an individual’s data was used in training, potentially revealing sensitive information and compromising model integrity.

Understanding the mechanisms and implications of these attacks is crucial for developing robust defense strategies. By adopting mitigation techniques such as regularization, data augmentation, and differential privacy, organizations can reduce their models’ exposure to these attacks and better protect the privacy of their training data.

As machine learning continues to advance, membership inference attacks will likely remain a concern. Ongoing research and collaboration are essential to develop more effective defense mechanisms and ensure the responsible use of machine learning technology.