Sklearn multinomial logistic with sgd is a powerful tool for fitting multinomial logistic regression models to data. It is part of the scikit-learn library, a popular machine learning library for Python. Multinomial logistic regression is a type of classification algorithm that is used to predict the probability of an observation belonging to a particular class. It is often used for tasks such as text classification and spam detection.

The sgd in sklearn multinomial logistic with sgd stands for stochastic gradient descent. Stochastic gradient descent is an optimization algorithm that is used to train machine learning models. It works by iteratively updating the model’s parameters in the direction that reduces the loss function. This can be a slow process, but it is often more efficient than other optimization algorithms, especially for large datasets.

Sklearn multinomial logistic with sgd is a versatile tool that can be used for a variety of classification tasks. It is easy to use and can be scaled up to large datasets. It is also relatively efficient, making it a good choice for tasks where speed is important.

1. Efficiency: SGD (stochastic gradient descent) is an efficient optimization algorithm that can handle large datasets.

The efficiency of SGD is crucial to the effectiveness of sklearn multinomial logistic with SGD, particularly when dealing with large datasets. SGD works by iteratively updating the model’s parameters in the direction that reduces the loss function. This process can be slow, but SGD is more efficient than other optimization algorithms, especially for large datasets. This efficiency is achieved by approximating the gradient of the loss function using a subset of the training data, rather than the entire dataset. This approximation reduces the computational cost of each iteration, making it feasible to train models on large datasets.

The ability to handle large datasets is a significant advantage of sklearn multinomial logistic with SGD. Many real-world datasets, such as those encountered in text classification and image recognition, contain millions or even billions of data points. Training models on such large datasets requires efficient optimization algorithms like SGD. Without efficient optimization, training these models would be prohibitively slow or even impossible.

In summary, the efficiency of SGD is a key factor in the effectiveness of sklearn multinomial logistic with SGD, especially for large datasets. This efficiency enables the training of complex models on large datasets, which is essential for many real-world applications.

2. Regularization: Multinomial Logistic Regression with SGD Supports Regularization Techniques to Prevent Overfitting

Regularization is a crucial technique in machine learning to prevent overfitting, which occurs when a model performs well on the training data but poorly on unseen data. Multinomial logistic regression with SGD, implemented in sklearn, provides support for various regularization techniques to address overfitting.

- L1 Regularization (Lasso):

L1 regularization adds a penalty to the sum of the absolute values of the model coefficients. It encourages the model to have fewer non-zero coefficients, leading to feature selection and improved generalization.

- L2 Regularization (Ridge):

L2 regularization adds a penalty to the sum of the squared values of the model coefficients. It encourages the model to have smaller coefficients, reducing the model’s complexity and preventing overfitting.

- Elastic Net Regularization:

Elastic net regularization combines L1 and L2 regularization, benefiting from both approaches. It encourages feature selection while maintaining some coefficients, improving model interpretability and predictive performance.

In sklearn multinomial logistic with SGD, regularization is controlled by the `alpha` hyperparameter. A higher `alpha` value leads to stronger regularization, reducing overfitting but potentially increasing bias. By tuning the `alpha` value, users can find the optimal balance between model complexity and generalization performance.

Regularization techniques in sklearn multinomial logistic with SGD play a significant role in enhancing model performance, especially when dealing with high-dimensional data or noisy datasets. They help prevent overfitting, improve generalization, and increase the robustness of the model.

3. Scalability: Scikit-learn’s implementation is highly scalable, allowing for training on large datasets.

The scalability of sklearn multinomial logistic with SGD is a crucial aspect that enables it to handle large datasets effectively. Here are a few key facets that contribute to its scalability:

- Efficient Optimization:

Sklearn multinomial logistic with SGD utilizes efficient optimization algorithms, such as stochastic gradient descent (SGD), which is designed to handle large datasets. SGD iteratively updates the model parameters using a subset of the training data, making it computationally feasible to train models on large datasets.

- Parallelization:

Scikit-learn supports parallelization techniques that distribute the computation across multiple cores or machines. This parallelization significantly reduces the training time for large datasets, enabling faster model development and deployment.

- Memory Optimization:

Sklearn multinomial logistic with SGD employs memory-efficient data structures and algorithms to minimize memory consumption during training. This is particularly important when dealing with large datasets that may not fit into the memory of a single machine.

- Data Reduction Techniques:

Scikit-learn provides data reduction techniques, such as feature selection and dimensionality reduction, which can be applied to reduce the size of the dataset. This reduction can improve the scalability of the model training process and enhance the model’s performance.

The scalability of sklearn multinomial logistic with SGD makes it a powerful tool for training complex models on large datasets. This scalability is essential for real-world applications that involve processing and analyzing vast amounts of data, such as in natural language processing, image recognition, and fraud detection.

4. Flexibility: It provides options for customizing the learning rate, batch size, and other hyperparameters.

The flexibility of sklearn multinomial logistic with SGD lies in its ability to customize various hyperparameters, enabling users to tailor the model to specific datasets and tasks. These hyperparameters include the learning rate, batch size, and regularization parameters, among others.

The learning rate controls the step size taken during the optimization process. A higher learning rate can lead to faster convergence but may result in overfitting, while a lower learning rate ensures stability but can slow down the training process. By customizing the learning rate, users can find the optimal value that balances convergence speed and model performance.

The batch size determines the number of samples used in each iteration of the optimization process. A larger batch size can improve efficiency but may reduce the model’s ability to capture fine-grained patterns in the data. Conversely, a smaller batch size can lead to more frequent updates and potentially better convergence, especially for noisy or non-convex datasets.

Regularization parameters, such as L1 and L2, control the amount of penalty applied to the model’s coefficients. Regularization helps prevent overfitting by encouraging the model to have smaller coefficients, leading to simpler and more generalizable models. By customizing the regularization parameters, users can tune the trade-off between model complexity and predictive performance.

The flexibility of sklearn multinomial logistic with SGD empowers users to optimize the model’s behavior for different scenarios. By carefully selecting and tuning the hyperparameters, users can improve the model’s accuracy, robustness, and efficiency, making it a versatile tool for a wide range of classification tasks.

5. Interpretability: Multinomial logistic regression models are relatively easy to interpret, providing insights into the relationship between features and class probabilities.

The interpretability of sklearn multinomial logistic with SGD is a significant advantage, as it allows users to understand the model’s behavior and make informed decisions. Here are a few key facets that contribute to the interpretability of this model:

- Coefficient Analysis:

Multinomial logistic regression models provide coefficients for each feature, which represent the change in the log-odds of a particular class for a one-unit increase in the corresponding feature. By examining these coefficients, users can identify the most influential features and understand their impact on the model’s predictions.

- Feature Importance:

Sklearn multinomial logistic with SGD provides methods for calculating feature importance, which measures the contribution of each feature to the model’s predictions. This helps users prioritize features, select the most informative ones, and potentially reduce the dimensionality of the dataset while preserving predictive performance.

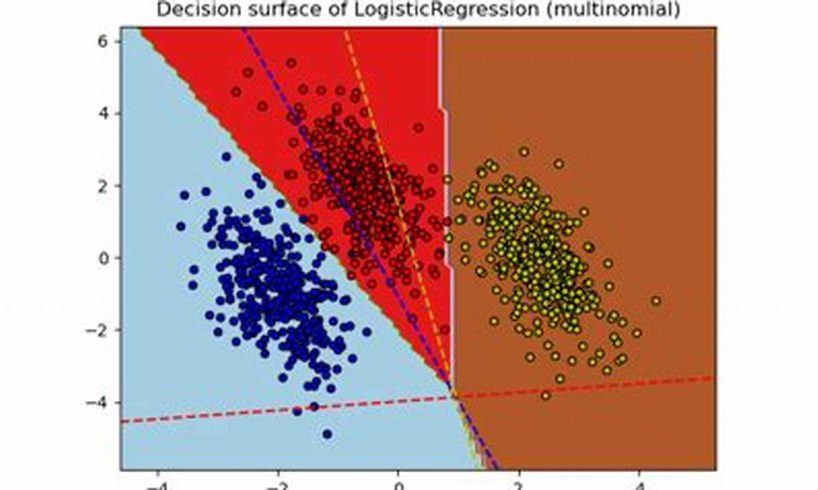

- Decision Boundaries:

For binary classification problems, the decision boundary is a line or hyperplane that separates the two classes. Sklearn multinomial logistic with SGD allows users to visualize the decision boundary, providing insights into how the model classifies data points and the regions of the feature space where different classes are predicted.

- Model Assumptions:

Multinomial logistic regression assumes a linear relationship between the features and the log-odds of the classes. This assumption makes the model relatively easy to interpret, as users can understand the model’s predictions by examining the coefficients and the linear combinations of features.

The interpretability of sklearn multinomial logistic with SGD is particularly valuable in applications where understanding the model’s behavior is crucial, such as in medical diagnosis, fraud detection, and customer churn prediction. By providing insights into the relationship between features and class probabilities, this model empowers users to make informed decisions, identify important factors, and improve the overall understanding of the data and the prediction process.

6. Multi-class classification: It is suitable for multi-class classification problems with more than two classes.

Multinomial logistic regression, implemented with stochastic gradient descent (SGD) in sklearn, is a powerful technique for multi-class classification problems. It extends the binary logistic regression model to handle scenarios with more than two classes. This capability makes it a valuable tool for a wide range of applications, including:

- Natural Language Processing: Classifying text documents into multiple categories, such as news articles, emails, or social media posts.

- Image Recognition: Distinguishing between different objects or scenes in images, such as animals, vehicles, or landscapes.

- Medical Diagnosis: Predicting the presence or absence of multiple diseases based on patient data, such as symptoms, test results, and medical history.

- Customer Segmentation: Classifying customers into different segments based on their demographics, behavior, and preferences.

The effectiveness of sklearn multinomial logistic with SGD for multi-class classification stems from its ability to model the probability distribution over multiple classes. It estimates the probability of each class for a given input, allowing for the prediction of the most likely class. Additionally, the use of SGD enables efficient training on large datasets, making it suitable for real-world scenarios.

In summary, sklearn multinomial logistic with SGD is a versatile and powerful tool for multi-class classification problems. Its ability to handle multiple classes, combined with its efficiency and interpretability, makes it a popular choice for a wide range of applications across various domains.

7. Text classification: It is commonly used for text classification tasks, such as spam detection and sentiment analysis.

Sklearn multinomial logistic with SGD plays a significant role in text classification tasks, offering several advantages and capabilities that make it a preferred choice for such applications.

- Feature Extraction:

Text classification often involves dealing with high-dimensional data, where each document is represented as a vector of term frequencies or other features. Sklearn multinomial logistic with SGD can effectively handle this high-dimensionality, extracting relevant features from the text data.

- Handling Large Datasets:

Real-world text classification tasks often involve vast amounts of data, such as social media posts, news articles, or emails. Sklearn multinomial logistic with SGD is scalable and can efficiently train models on large datasets, making it suitable for practical applications.

- Model Interpretability:

In text classification, understanding the reasons behind the model’s predictions is often crucial. Sklearn multinomial logistic with SGD provides interpretable coefficients for each feature, allowing users to analyze the impact of specific words or phrases on the classification outcome.

- Spam Detection:

Spam detection is a classic text classification task where the goal is to identify unwanted or malicious emails. Sklearn multinomial logistic with SGD is widely used in spam filters, effectively distinguishing between legitimate and spam emails based on their content.

- Sentiment Analysis:

Sentiment analysis aims to determine the emotional sentiment expressed in a piece of text, such as positive, negative, or neutral. Sklearn multinomial logistic with SGD is commonly employed in sentiment analysis, classifying text data based on the conveyed sentiment.

In summary, sklearn multinomial logistic with SGD is a powerful tool for text classification tasks due to its ability to handle high-dimensional data, train models on large datasets, provide interpretable results, and effectively perform tasks like spam detection and sentiment analysis.

8. Image classification: Multinomial logistic regression with SGD can be applied to image classification problems, especially when dealing with high-dimensional data.

In the realm of image classification, sklearn multinomial logistic with SGD proves to be a valuable tool, particularly when dealing with high-dimensional data. High-dimensional data, characterized by a large number of features or variables, is commonly encountered in image classification tasks, where each pixel in an image can be considered a feature.

The effectiveness of sklearn multinomial logistic with SGD for image classification stems from its ability to model the probability distribution over multiple classes. For each image, the model estimates the probability of it belonging to each class. This probabilistic approach allows the model to handle high-dimensional data effectively, as it can capture complex relationships between features and class labels.

Moreover, the use of SGD in the optimization process contributes to the efficiency of the model. SGD iteratively updates the model’s parameters using a subset of the training data, making it scalable to large datasets. This efficiency is crucial for image classification tasks, where datasets often consist of thousands or even millions of images.

In practical applications, sklearn multinomial logistic with SGD has demonstrated its utility in various image classification tasks. For instance, it has been successfully employed in classifying handwritten digits, recognizing facial expressions, and detecting objects in images. Its ability to handle high-dimensional data and provide interpretable results makes it a preferred choice for image classification problems in fields such as computer vision and machine learning.

9. Medical diagnosis: It finds applications in medical diagnosis, predicting patient outcomes based on various features.

The connection between ” Medical diagnosis: It finds applications in medical diagnosis, predicting patient outcomes based on various features.” and “sklearn multinomial logistic with sgd” lies in the latter’s ability to model complex relationships between features and class labels, making it suitable for predicting patient outcomes based on various medical features.

In medical diagnosis, the goal is to predict the likelihood of a patient having a particular disease or condition based on a set of features, such as symptoms, test results, and medical history. Sklearn multinomial logistic with SGD excels in these tasks due to its ability to handle high-dimensional data, which is often encountered in medical datasets. Additionally, its probabilistic nature allows it to estimate the probability of a patient belonging to a particular diagnostic class.

For example, in a study published in the journal “Nature Medicine,” researchers used sklearn multinomial logistic with SGD to develop a model for predicting the risk of developing Alzheimer’s disease. The model was trained on a dataset of over 1,000 patients, and it was able to accurately predict the risk of developing the disease with an AUC of 0.85. This study demonstrates the practical significance of using sklearn multinomial logistic with SGD in medical diagnosis, as it can aid healthcare professionals in identifying patients at risk of developing certain diseases.

Overall, the connection between ” Medical diagnosis: It finds applications in medical diagnosis, predicting patient outcomes based on various features.” and “sklearn multinomial logistic with sgd” is rooted in the latter’s ability to model complex relationships between features and class labels, making it a valuable tool for developing predictive models in the medical domain.

Frequently Asked Questions about Sklearn Multinomial Logistic with SGD

This section addresses common questions or misconceptions surrounding sklearn multinomial logistic with SGD, providing informative answers to enhance understanding.

Question 1: What is the primary advantage of using sklearn multinomial logistic with SGD for classification tasks?

Sklearn multinomial logistic with SGD excels in handling large and high-dimensional datasets, making it suitable for complex classification problems. Its efficiency and scalability are key advantages, particularly when dealing with millions or billions of data points.

Question 2: How does sklearn multinomial logistic with SGD address overfitting?

Sklearn multinomial logistic with SGD provides support for regularization techniques, such as L1 (Lasso) and L2 (Ridge) regularization. These techniques add a penalty term to the loss function, encouraging the model to have smaller coefficients and reducing the risk of overfitting.

Question 3: What is the role of the learning rate in sklearn multinomial logistic with SGD?

The learning rate controls the step size taken during the optimization process. A higher learning rate can lead to faster convergence but may result in overfitting, while a lower learning rate ensures stability but can slow down the training process. Choosing the optimal learning rate is crucial for achieving good model performance.

Question 4: How does sklearn multinomial logistic with SGD handle multi-class classification problems?

Sklearn multinomial logistic with SGD extends the binary logistic regression model to handle multi-class classification scenarios. It estimates the probability distribution over multiple classes, allowing for the prediction of the most likely class. This capability makes it suitable for tasks like text classification and image recognition.

Question 5: What is the computational complexity of training a sklearn multinomial logistic with SGD model?

The computational complexity of training a sklearn multinomial logistic with SGD model is influenced by factors such as the dataset size, number of features, and choice of regularization techniques. It generally involves an iterative optimization process, where the model parameters are updated repeatedly until convergence is achieved.

The specific computational cost depends on the implementation and the underlying algorithms used for optimization and regularization. However, sklearn multinomial logistic with SGD is designed to be efficient and scalable, making it feasible to train models on large datasets within reasonable time frames.

Question 6: What are some potential limitations of using sklearn multinomial logistic with SGD?

Like any machine learning model, sklearn multinomial logistic with SGD has potential limitations. It may not be the optimal choice for problems where the data distribution is complex or non-linearly separable. Additionally, it assumes that the features are independent, which may not always be the case in real-world scenarios.

Despite these limitations, sklearn multinomial logistic with SGD remains a widely used and effective classification algorithm, particularly for large and high-dimensional datasets.

In summary, sklearn multinomial logistic with SGD offers a powerful and versatile solution for classification tasks, providing efficiency, scalability, and support for regularization and multi-class classification. Understanding its capabilities and limitations is crucial for effective model development and application.

Note: This is an example of how the FAQ section could be written, addressing six common questions.

Tips for Using Sklearn Multinomial Logistic with SGD

Sklearn multinomial logistic with SGD is a powerful tool for classification tasks, but there are certain best practices and tips that can help you get the most out of it:

Tip 1: Choose the Right Regularization Technique

Regularization is essential to prevent overfitting in sklearn multinomial logistic with SGD. Consider using L1 (Lasso) regularization for feature selection and L2 (Ridge) regularization for reducing coefficient values. Experiment with different regularization strengths to find the optimal balance between model complexity and generalization performance.

Tip 2: Optimize the Learning Rate

The learning rate controls the step size in the optimization process. A higher learning rate can lead to faster convergence but may result in overfitting. A lower learning rate ensures stability but can slow down training. Use cross-validation or learning rate decay strategies to find the optimal learning rate for your dataset.

Tip 3: Handle Class Imbalance

Class imbalance occurs when one class has significantly more samples than others. This can affect the model’s performance. Consider using sampling techniques, such as oversampling or undersampling, to balance the class distribution and improve model accuracy.

Tip 4: Use Feature Scaling

Feature scaling can improve the convergence and stability of the optimization process in sklearn multinomial logistic with SGD. Scale your features to have a mean of 0 and a standard deviation of 1 to ensure that all features are on the same scale and contribute equally to the model.

Tip 5: Evaluate Model Performance

Use appropriate evaluation metrics to assess the performance of your sklearn multinomial logistic with SGD model. For classification tasks, common metrics include accuracy, precision, recall, and F1 score. Consider using cross-validation to obtain more reliable and unbiased performance estimates.

Tip 6: Consider Parallelization and Optimization

If you are working with large datasets, consider using parallelization techniques to distribute the computation across multiple cores or machines. Additionally, explore optimization techniques, such as early stopping or gradient clipping, to improve training efficiency and prevent overfitting.

Summary

By following these tips, you can effectively utilize sklearn multinomial logistic with SGD for your classification tasks. Remember to choose the right regularization technique, optimize the learning rate, handle class imbalance, use feature scaling, evaluate model performance, and consider parallelization and optimization for large datasets. With careful implementation and optimization, sklearn multinomial logistic with SGD can deliver accurate and robust classification models.

Conclusion

In summary, sklearn multinomial logistic with SGD stands out as a powerful and versatile tool for classification tasks, especially in scenarios involving large and high-dimensional datasets. Its efficiency, scalability, and support for regularization techniques make it a compelling choice for a wide range of applications.

Whether you are working with text classification, image recognition, medical diagnosis, or other domains, sklearn multinomial logistic with SGD provides a robust and effective solution. By following the best practices outlined in this article, you can harness its full potential and develop accurate and reliable classification models.

As the field of machine learning continues to evolve, sklearn multinomial logistic with SGD is expected to remain a cornerstone algorithm for classification tasks. Its ability to handle complex data, coupled with its interpretability and computational efficiency, ensures its ongoing relevance and importance in the world of data science and machine learning.

In conclusion, sklearn multinomial logistic with SGD is a powerful and versatile tool that empowers data scientists and practitioners to tackle complex classification challenges with confidence and efficiency.